The first multimeters were usually 20,000 ohms/volt. This was a measure of how much loading the meter presented to the circuit under test. For each volt of full scale selected, the meter represented a load of 20,000 ohms. The 15 volt scale would present a load of 300,000 ohms (15 x 20,000). The 3 volt scale would be 60,000 ohms.
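The ohms/volt arithmetic is easy to check in a few lines. This is just a rough sketch: the function name `meter_resistance` and the 30 volt range are made up for illustration, and only the 20,000 ohms/volt figure comes from the post.

```python
def meter_resistance(full_scale_volts, ohms_per_volt=20_000):
    """Load the meter presents on a given range: sensitivity times full-scale voltage."""
    return full_scale_volts * ohms_per_volt


# The 3 volt range is from the text above; the 30 volt range is a hypothetical extra.
for full_scale in (3, 30):
    print(f"{full_scale} V range: {meter_resistance(full_scale):,} ohms")
```

The point is that the load depends on the range you select, not on the voltage you actually happen to be measuring.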

The VTVM used a tube in a bridge circuit to reduce loading. Most of them had a 10 Meg resistor in the probe, which raised the input impedance to the point where loading was almost negligible.
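To get a feel for why the higher input impedance matters, here is a rough sketch that treats the circuit under test as a voltage source behind a series resistance, so the meter forms a simple voltage divider with it. The 100,000 ohm source resistance and the function name `reading_error_percent` are assumptions picked for illustration, not figures from any particular meter or circuit.

```python
def reading_error_percent(source_ohms, meter_ohms):
    """Percent by which the meter reads low due to loading (simple voltage divider)."""
    return 100.0 * source_ohms / (source_ohms + meter_ohms)


SOURCE_OHMS = 100_000  # hypothetical resistance of the circuit being measured

# 60,000 ohms: a 20,000 ohms/volt meter on its 3 volt range.
# 10,000,000 ohms: an input impedance on the order of 10 Meg.
for label, meter_ohms in (("60k meter", 60_000), ("10 Meg meter", 10_000_000)):
    error = reading_error_percent(SOURCE_OHMS, meter_ohms)
    print(f"{label}: reads about {error:.1f}% low")
```

With those assumed numbers the low-impedance meter reads well over half low, while the 10 Meg input is off by roughly one percent.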

Today's DVMs can do the same thing. You just don't get a cool meter pointer to look at.

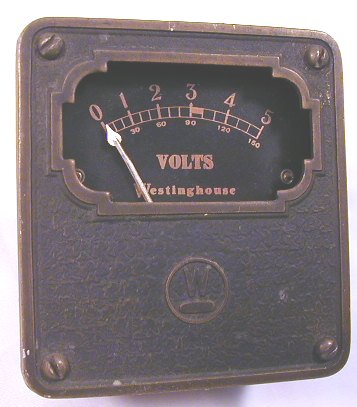

For the record, this picture was taken with 100 foot-candles of light.